Ceph on Fedora 16

Last time I complained about how much ceph tries to do for you. For better or worse, now it attempts to do more for you!

For my setup, I had 3 nodes in the HCC private cloud. First, we need to install ceph on every node:

$ yum install ceph

Next, create a configuration file for ceph. The RPM comes with a good example, which my configuration is based on: /usr/share/doc/ceph/sample.ceph.conf

My configuration: Derek's Configuration

The configuration has authentication turned off. I found this useful because ceph-authtool (yes, they renamed it since Fedora 15) is difficult to use, and because all of the nodes are on a private VLAN that is only reachable with my OpenVPN key :)
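If the linked file is unavailable, the sketch below shows roughly what such a configuration could look like, written as a shell heredoc. It is an assumed layout modeled on this post (three OSDs in /data/osd.N, one monitor at 10.148.2.147, authentication off), not a copy of the linked file. `node0` is a hypothetical hostname for the first node, and the option names follow the older sample.ceph.conf style, so compare them with the sample shipped with your ceph version.

```shell
# Minimal ceph.conf sketch (an assumed layout, not the linked file).
# "node0" is a hypothetical hostname for the first node; i-000000c2,
# i-000000c3 and the monitor IP 10.148.2.147 are the ones from this post.
CONF="${CEPH_CONF:-./ceph.conf}"

# Quoting 'EOF' stops the shell from expanding Ceph's $id metavariable.
cat > "$CONF" <<'EOF'
[global]
    ; authentication disabled
    auth supported = none

[mon]
    mon data = /data/mon.$id
[mon.a]
    host = node0
    mon addr = 10.148.2.147:6789

[mds.a]
    host = node0

[osd]
    osd data = /data/osd.$id
    osd journal = /data/osd.$id/journal
    ; journal size in MB
    osd journal size = 1000
[osd.0]
    host = node0
[osd.1]
    host = i-000000c2
[osd.2]
    host = i-000000c3
EOF

echo "wrote $CONF"
```

Make sure the same file ends up at /etc/ceph/ceph.conf on every node. The monitor data path (/data/mon.a here) is also an assumption; create that directory too if your mkcephfs does not.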

Next, create an ssh key and distribute it to all of your nodes so that mkcephfs can ssh to them and configure them:

$ ssh-keygen

Then copy the key to the other nodes:

$ ssh-copy-id i-000000c2

$ ssh-copy-id i-000000c3
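Before running mkcephfs, it can help to confirm that each node really accepts key-based login without a password prompt. A small sketch follows; `check_ssh_nodes` is a hypothetical helper name, and it relies only on standard OpenSSH options (`BatchMode`, `ConnectTimeout`).

```shell
# Hypothetical helper: confirm each node accepts key-based ssh login without
# prompting, so that mkcephfs -a can reach every host without password prompts.
check_ssh_nodes() {
  status=0
  for host in "$@"; do
    # BatchMode=yes makes ssh fail instead of asking for a password;
    # -n keeps ssh from reading this shell's stdin.
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "$host: ok"
    else
      echo "$host: FAILED"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_ssh_nodes i-000000c2 i-000000c3`. A FAILED line means that host still wants a password (or is unreachable), which would likely stall mkcephfs at that host.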

Be sure to create the data directories on all of the nodes. In this case:

$ mkdir -p /data/osd.0

$ ssh i-000000c2 'mkdir -p /data/osd.1'

$ ssh i-000000c3 'mkdir -p /data/osd.2'
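With more nodes, typing one mkdir per OSD gets tedious. The sketch below prints the commands instead of running them, so they can be reviewed first. `osd_mkdir_cmds` is a hypothetical helper; it assumes OSD ids are handed out in the order the hosts are listed (first host gets osd.0), matching the layout above, and uses `local` to mean the current machine.

```shell
# Hypothetical helper: print one mkdir command per OSD. OSD ids follow the
# argument order (first host gets osd.0); "local" means this machine.
osd_mkdir_cmds() {
  id=0
  for host in "$@"; do
    if [ "$host" = local ]; then
      echo "mkdir -p /data/osd.$id"
    else
      # -n keeps ssh from swallowing the remaining commands when piped to sh
      echo "ssh -n $host 'mkdir -p /data/osd.$id'"
    fi
    id=$((id + 1))
  done
}

osd_mkdir_cmds local i-000000c2 i-000000c3
```

Once the printed commands look right, pipe them to a shell: `osd_mkdir_cmds local i-000000c2 i-000000c3 | sh`.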

Then run the mkcephfs command:

$ mkcephfs -a -c /etc/ceph/ceph.conf

And start up the daemons:

$ service ceph start

The daemons should now be running. If one fails to start, it usually prints what the problem was, and the logs for the services are in /var/log/ceph.
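When a daemon dies quietly, skimming the end of every log is often the fastest way to find out why. A minimal sketch; `ceph_log_tails` is a hypothetical helper, and it assumes the logs in /var/log/ceph end in `.log`.

```shell
# Hypothetical helper: print the last few lines of every Ceph log so a daemon
# that failed to start is easy to spot. Defaults: /var/log/ceph, 20 lines.
ceph_log_tails() {
  dir=${1:-/var/log/ceph}
  lines=${2:-20}
  for log in "$dir"/*.log; do
    [ -e "$log" ] || continue   # no .log files: the glob stays unexpanded
    echo "==> $log <=="
    tail -n "$lines" "$log"
  done
}
```

Usage: `ceph_log_tails` on each node, or `ceph_log_tails /var/log/ceph 50` for more context.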

To mount the filesystem, find the IP address of one of the monitors. In my case, I had a monitor at 10.148.2.147. The commands to mount are:

$ mkdir -p /mnt/ceph

$ mount -t ceph 10.148.2.147:/ /mnt/ceph

Since authentication is turned off, the mount should work without any problems.
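Rather than looking up the monitor address by hand, it can be read from the configuration file. The sketch below assumes the monitor is declared with a `mon addr = ip:port` line, as in the sample configuration; `ceph_mount_cmd` is a hypothetical helper. The kernel client accepts the monitor as `ip:port:/` in the mount source.

```shell
# Hypothetical helper: read the first "mon addr" from a ceph.conf and print the
# matching kernel-client mount command (the source may be given as ip:port:/).
ceph_mount_cmd() {
  conf=$1
  mnt=${2:-/mnt/ceph}
  addr=$(sed -n 's/^[[:space:]]*mon addr[[:space:]]*=[[:space:]]*//p' "$conf" | head -n 1)
  if [ -z "$addr" ]; then
    echo "no 'mon addr' found in $conf" >&2
    return 1
  fi
  echo "mount -t ceph $addr:/ $mnt"
}

# Demo against a throwaway config containing only a monitor section.
demo=$(mktemp)
printf '[mon.a]\n    host = node0\n    mon addr = 10.148.2.147:6789\n' > "$demo"
ceph_mount_cmd "$demo"   # prints: mount -t ceph 10.148.2.147:6789:/ /mnt/ceph
rm -f "$demo"
```

On a real node, as root: `mkdir -p /mnt/ceph && ceph_mount_cmd /etc/ceph/ceph.conf | sh`.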

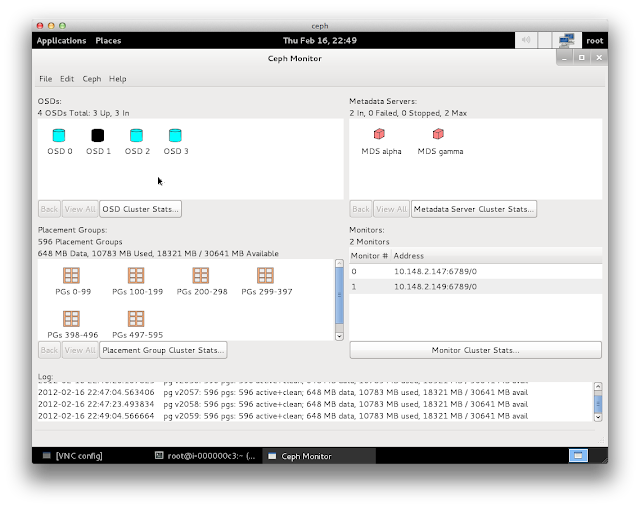

I've had some problems with the different MDS daemons, and even had an OSD die on me. It resolved itself, and I also added another OSD to take its place, recreating the CRUSH map. Since then, I have also worked with the graphical interface.

And here's a presentation I did about the Ceph paper. Note that I may not be entirely accurate in the presentation, so be kind.
