Reprocessing CMS events with Bosco

The CMS collaborators at UCSD worked with the San Diego Supercomputer Center (SDSC) to run the processing on the Gordon supercomputer. Gordon is an XSEDE resource and does not provide a traditional OSG Globus Gatekeeper, and we did not have root access to the cluster to install one. Therefore, Bosco was used to submit and manage the GlideinWMS Condor glideins on the resource.

|

| Running jobs at Gordon, the SDSC supercomputer |

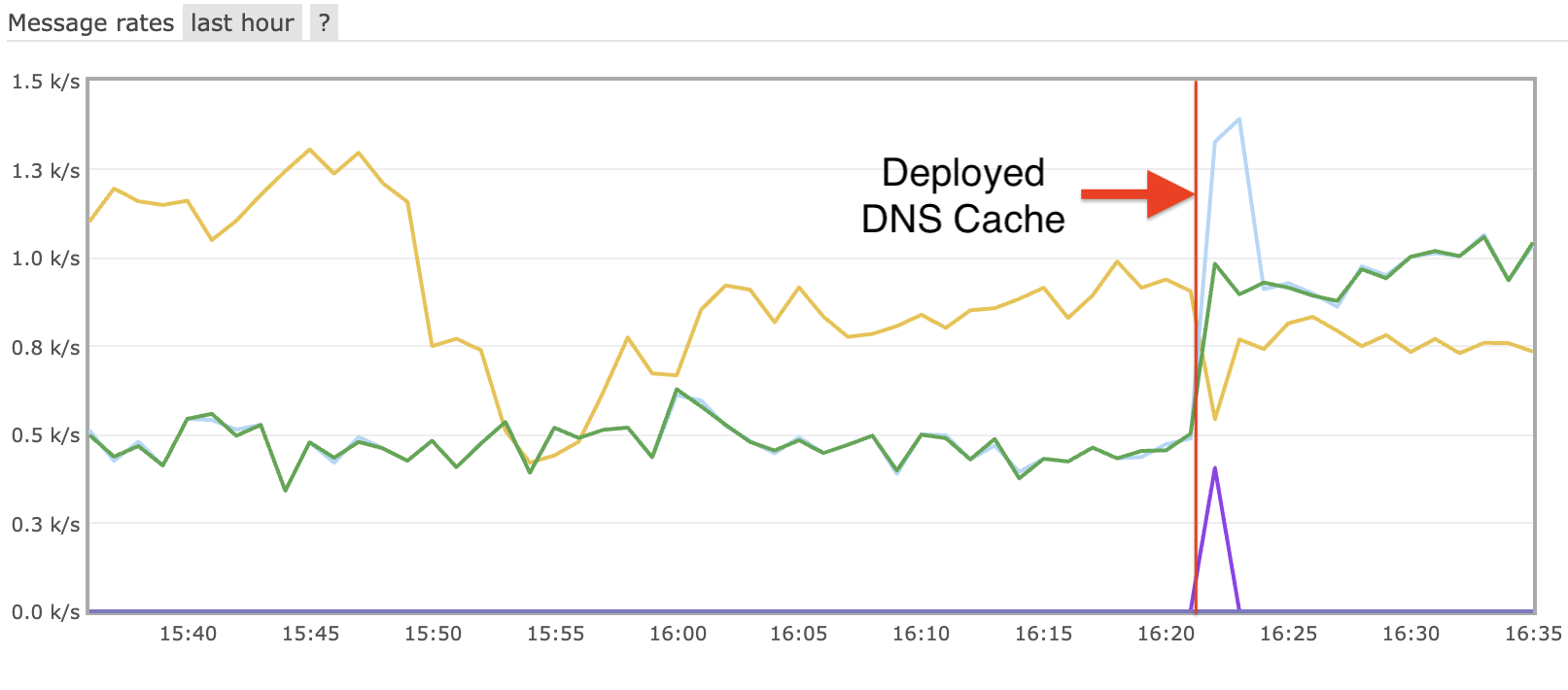

As you can see from the graph, we reached nearly 4,000 concurrent CMS processing jobs on Gordon. Roughly 4,000 cores is more than most CMS Tier-2 sites provide, and on par with a European Tier-1. With Bosco, Gordon became one of the largest CMS clusters in the world overnight.

Full details will appear in a paper submitted to CHEP '13 in Amsterdam, and Bosco will also be presented there in a poster (and paper). I hope to see you there!

(If I got any details wrong about the CMS side of this run, please let me know. I have intimate knowledge of the Gordon side, but not so much of the CMS side.)
